TDM 10100: Project 5 - Manipulating Data

Project Objectives

Motivation: Often data is messy or too big to work with. Knowing how to create subsets and clean up data values is a valuable skill.

Context: We will use a few different datasets to practice data manipulation.

Scope: R, data cleaning, subsets, plots.

Dataset

-

/anvil/projects/tdm/data/flights/subset/2006.csv

-

/anvil/projects/tdm/data/icecream/combined/products.csv

-

/anvil/projects/tdm/data/flights/subset/1997.csv

-

/anvil/projects/tdm/data/8451/The_Complete_Journey_2_Master/5000_transactions.csv (used in the video)

-

/anvil/projects/tdm/data/election/itcont1992.txt (used in the video)

-

/anvil/projects/tdm/data/flights/subset/1992.csv (used in the video)

-

/anvil/projects/tdm/data/donorschoose/Donations.csv (used in the video)

|

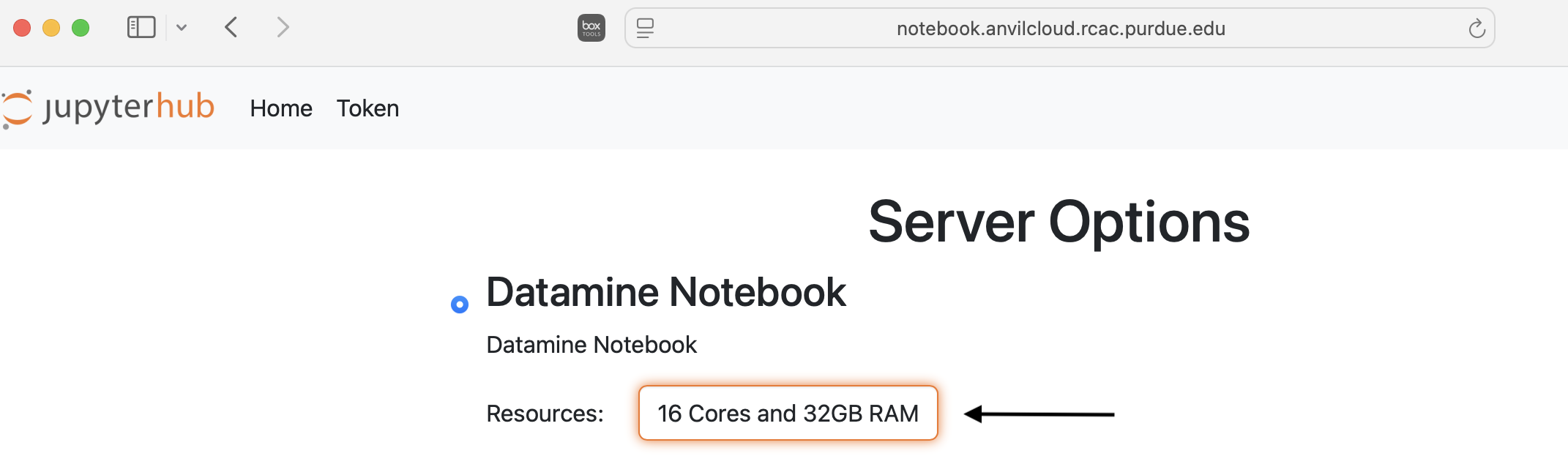

Please use 16 Cores and 32GB RAM for this project: |

Flights

The flights dataset is huge, with files from 1987 to 2023, each with respective subset datasets just to make the data reasonable to work with. The flights data provides numerous opportunities for data exploration with 7,141,922 rows of data from 2006 and 5,411,843 rows from 1997 alone. These subsets contain information about when each flight took place, as well as different factors like how long they took, specifics like flight numbers, and more. There are, of course, empty or messy values, but there is so much data that this does not make too much of an impact for what we will be doing.

There are 29 columns and millions of rows of data. Some of these columns include:

-

Month: numeric month values -

DayofMonth: day of month (1 - (28 up to 31)) -

DayOfWeek: numeric day values -

DepTime: flight departure time in military time shorthand -

ArrTime: flight arrival time in military time shorthand -

AirTime: flight time, in minutes -

Origin: abbreviation values for origin airport -

Dest: abbreviation values for destination airport

Ice Cream Products

The Ice Cream dataset is on the smaller side, containing just a few hundred rows of data. This dataset contains information about four different ice cream brands' products, with product information that would make purchasing easier, such as the description, how the product was rated, and what ingredients it contains.

Some of the columns (description, ingredients) of this dataset are not particularly useful given our current skillset, and can make looking at rows of this data not so easy. Which makes taking specific columns (like the rating column) and isolating them for work ideal.

The rating column is each ice cream’s customer rating on a scale of 0 - 5.

Questions

Question 1 (2 points)

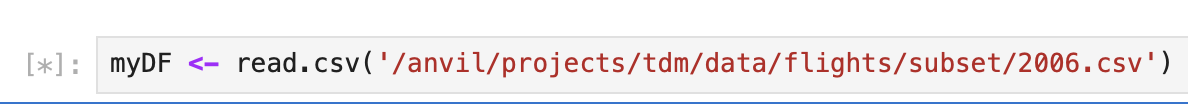

The flights dataset is much too large to work with all at once, so we are going to be working with the 2006 subset. This dataset contains all of the same columns as the other flights datasets, just only containing the rows for which the year value is 2006. Read in the 2006 flights subset data as myDF.

You will notice a star (see below) at the beginning of the code line for this dataset, as it takes some time to load in Anvil due to its large size:

If some of the columns do not show up (they shown as … in the output) when looking at the head() of the dataset, use options(repr.matrix.max.cols=100, repr.matrix.max.rows=100) to set a high maximum number of rows and columns that can be displayed at a time.

Create pa_flights containing only the flights that have a destination of the Philadelphia International Airport (PHL).

Remember, when subsetting in R, we use the myDF[row, column] logic. For instance:

# Example: selecting rows where Food equals "pizza"

pizza <- MyHungryData[MyHungryData$Food == "pizza", ]Following the same approach, you can filter flights with destination "PHL". If you look at the shape of this `pa_flights`, you'll see that this subset still contains many rows of data. `PHL` is one of the most popular airports, with many flights leaving from and arriving there. Show how many unique `Origin` spots there are for flights going to `PHL`.

Using the flights' origins, use tapply() to find the average AirTime for each flight in pa_flights data. Remember to remove NA values (na.rm=TRUE option in tapply()) so this result is meaningful. This output will show the average air time for each unique flight origin, with a destination of PHL.

Make a second temporary dataframe containing the data for flights ending at PHX. Use tapply() to view the average AirTime across each flight origin.

1.1 How many flight origins did PHL have in 2006?

1.2 What was the average AirTime for flights leaving BOS in pa_flights in 2006?

1.3 What was the average AirTime across each Origin for flights ending (myDF$Dest) in PHX in 2006?

Question 2 (2 points)

In question 1, we only used the destination of two selected airports. Here, use the top 20 destinations when comparing each average air time of each origin-and-destination pair.

There is a function names() that will return the names or labels of a search. Use names() and make a new variable top_20 to save the first 20 rows of your sorted table displaying the highest counted number of flight destinations.

table(myDF$Dest) counts how many flights go to each destination.

sort(…, decreasing = TRUE) sorts the destinations so the busiest ones (with the most flights) come first.

head(…, 20) takes only the first 20 from this sorted list.

Finally, names(…) gives you just the destination names from that list.

We already practiced how %in% works: it checks whether a value is included in another set of values.

In this example, we can use %in% to filter myDF so that we only keep the rows where the destination (Dest) is one of the values inside the vector top_20.

This will give you a new data frame, containing only the flights going to those top 20 destinations:

top_dests_df <- myDF[myDF$Dest %in% top_20, ]In tapply() function, we were also able to work with column/variable pairs. For example, we can show the average AirTime across each Origin and Dest pairs for top 20 destination pair as following:

With the tapply() function, we can also work with pairs of columns (variables). For example, we can calculate the average AirTime for each combination of Origin and Dest. Below is how we can do this for the top 20 destinations:

tapply(top_dests_df$AirTime, list(top_dests_df$Origin, top_dests_df$Dest), mean, na.rm=TRUE)You should get a table with rows for the origin, columns for the destination, and values of each pair’s average air time.

2.1 What airports are in top_20?

2.2 Why did we have sort before using names()?

2.3 How do you explain the NA values in the last table with Origin and Destination pairs even after using na.rm=TRUE?

|

Please refer the following videos of Dr. Ward for more exercises using pair of columns in Example 1 Exampl 2 |

Question 3 (2 points)

Read in the ice cream products file from /anvil/projects/tdm/data/icecream/combined/products.csv as ice_cream. View the table of the rating column. This shows the counts of each rating (from 0 to 5), and is helpful, but there is something else we want to find.

To better understand this column and how each ice cream was received, we could add labels to each range of rating. To decide what ranges to use, view the summary of the dataset. The rating column is broken down by

-

Min. -

1st qu. -

Median -

Mean -

3rd Qu. -

Max.

The cut() function in R is used to divide continuous numeric data into "bins". It converts numeric values into factor levels, making it useful for grouping or categorizing data.

|

Please refer the following video of Dr. Ward for more practices with Example 3 The following video shows how to fix one of the common mistakes when using the Example 4 |

Additionally, you can find useful examples on the cut() command here

With ice_cream data, use the cut() command to classify the four rating ranges:

-

"Wouldn't Recommend": 0% - 25% -

"Needs Improvement": 25% - 50% -

"Solid Choice": 50% - 75% -

"Fan Favorite": 75% - 100%

|

Use the More spesifically, you will use |

Wrap the results from the cut in a table().

In the cut() function, add labels corresponding to the quality of the ice cream. Save this as a new column rating_phrases.

3.1 Generate and then show the head() and table() of new rating_phrases column in ice_cream data

3.2 How else would you use the cut() command on the ice cream dataset?

|

For more exercise with Example 5 Example 6 |

Question 4 (2 points)

Read in the 1997 flights subset dataset as my_flights. This dataset has the same structure as the 2006 flights data we used earlier, but it only includes flights from the year 1997.

In this data, the DepTime column tracks what time each flight departs. This column doesn’t display time like we would expect, instead using a range from 1 (00:01am) to 2400 (midnight). There are a lot of different values in this column, but it is OK to print out the table(my_flights$DepTime) to view what sort of times peoples' flights depart.

One way to make this data more readable is to add some set ranges using the cut() command. Like before with the ice cream data, this would allow us to analyze the data based on a smaller number of sets rather than each individual time.

Build a cut() function to break the DepTime column up into sections. Display the table of this to get each groups' number of occurrences.

Using the brakes 0, 300, 600, 900, 1200, 1500, 1800, 2100, 2400 allows for a usable number of groups with evenly spaced ranges of the same size. Having consistently split groups allows for fair analysis later on, though you do not have to use this specific grouping.

Add a corresponding label to each group from the broken up column (for example: night, early morning, etc.), and save this to a new column depart_times. This can be viewed as a table of each label and their respective count, or we could make a plot.

Create a barplot of depart_times. Make sure to include a title and axis labels that make sense for how you have grouped this data. Customize this plot however else you would like to.

|

One thing you may encounter is that the x-axis labels will not all show up. This can generally be fixed by adding |

Going back to the original my_flights dataset, look at the ArrTime column. This can relate some to the DepTime column as this new column tells what time each flight arrived rather than when it departed. Using the same breaks and labels as before, create a new plot showing the counts of each arrival time of the flights.

4.1 Using the departure times barplot, which time of day had the most departure flights?

4.2 Using the arrival times barplot, which time of day had the least arrival flights?

4.3 What is your conclusion as a result of this question?

Question 5 (2 points)

Still within the 1997 flights dataset, there are the columns Month, DayOfWeek, and AirTime. Something interesting we could find is how the day of the week and the month out of the year affect the total air time.

Use subset() to make a saved selection of rows for a specific month. For example:

march <- subset(my_flights, Month == 3)View the table of the DayOfWeek column for this month (march in our example). As expected, the counts of the flights for each day of this month are all within reasonable range of each other.

Do this again, just on all of the months (together) from the original dataframe.

To view the total AirTime for each DayOfWeek, write a tapply() function. Remember to remove NA values.

You should have 7 value categories.

Using tapply(), we’re able to create a more complicated table, where we choose an x-axis and a y-axis and for what values the cells in the table are being calculated. In this case, use AirTime as the values, and show Month and DayOfWeek on the axes, as following:

tapply(my_flights$AirTime, list(my_flights$DayOfWeek, my_flights$Month), sum, na.rm=TRUE)Save this table as flight_times, and make a heatmap showing the results.

|

When making a heatmap, it can sometimes help to use |

Create another table showing the total AirTime across the months, for each day of the month. Make a heatmap of this and compare to your first visualization.

5.1 Which heatmap (day of week or day of month) do you prefer and why?

5.2 What patterns did you find in air times across the months?

5.3 What customizations did you use to make your heatmaps useful?

Submitting your Work

Once you have completed the questions, save your Jupyter notebook. You can then download the notebook and submit it to Gradescope.

-

firstname_lastname_project5.ipynb

|

You must double check your You will not receive full credit if your |