Kubernetes

What is Kubernetes?

The Kubernetes team has put together a great overview of the tool. This page draws on that overview and expands on the topics and terms that our team uses most.

Kubernetes, also known as K8s, is an open-source system for automating deployment, scaling, and management of containerized applications. It groups containers that make up an application into logical units for easy management and discovery. Kubernetes builds upon 15 years of experience of running production workloads at Google, combined with best-of-breed ideas and practices from the community.

Benefits of Kubernetes

Kubernetes provides you with:

- Service discovery and load balancing. Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load balance and distribute the network traffic so that the deployment is stable.

- Storage orchestration. Kubernetes allows you to automatically mount a storage system of your choice, such as local storage, public cloud providers, and more.

- Automated rollouts and rollbacks. You can describe the desired state for your deployed containers using Kubernetes, and it can change the actual state to the desired state at a controlled rate. For example, you can automate Kubernetes to create new containers for your deployment, remove existing containers, and move all their resources to the new containers.

- Automatic bin packing. You provide Kubernetes with a cluster of nodes that it can use to run containerized tasks. You tell Kubernetes how much CPU and memory (RAM) each container needs. Kubernetes can fit containers onto your nodes to make the best use of your resources.

- Self-healing. Kubernetes restarts containers that fail, replaces containers, kills containers that don’t respond to your user-defined health check, and doesn’t advertise containers to clients until they are ready to serve.

- Secret and configuration management. Kubernetes lets you store and manage sensitive information, such as passwords, OAuth tokens, and SSH keys. You can deploy and update secrets and application configuration without rebuilding your container images, and without exposing secrets in your stack configuration.
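Several of these benefits come together in a single Deployment definition. The following is a minimal sketch, not a production configuration: it builds a Deployment manifest as a Python dictionary and prints it as JSON. The name `web`, the image `example.com/web:1.0`, the `/healthz` path, and all numeric values are assumptions chosen for illustration.

```python
import json

# Hypothetical names: "web", the image "example.com/web:1.0", and the
# "/healthz" path are placeholders for illustration only.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {
        "replicas": 3,  # desired state: three identical Pods
        "selector": {"matchLabels": {"app": "web"}},
        "strategy": {
            "type": "RollingUpdate",
            # Controlled rate: at most one extra Pod and one unavailable Pod.
            "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 1},
        },
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {
                "containers": [
                    {
                        "name": "web",
                        "image": "example.com/web:1.0",
                        "ports": [{"containerPort": 8080}],
                        # Requests inform scheduling (bin packing); limits cap usage.
                        "resources": {
                            "requests": {"cpu": "250m", "memory": "128Mi"},
                            "limits": {"cpu": "500m", "memory": "256Mi"},
                        },
                        # Liveness: the container is restarted if this check fails.
                        "livenessProbe": {
                            "httpGet": {"path": "/healthz", "port": 8080},
                            "periodSeconds": 10,
                        },
                        # Readiness: traffic is only routed once this check passes.
                        "readinessProbe": {
                            "httpGet": {"path": "/healthz", "port": 8080},
                            "periodSeconds": 5,
                        },
                    }
                ]
            },
        },
    },
}

manifest_json = json.dumps(deployment, indent=2)
print(manifest_json)
```

Because kubectl accepts JSON as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`.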

Kubernetes Architecture and Components

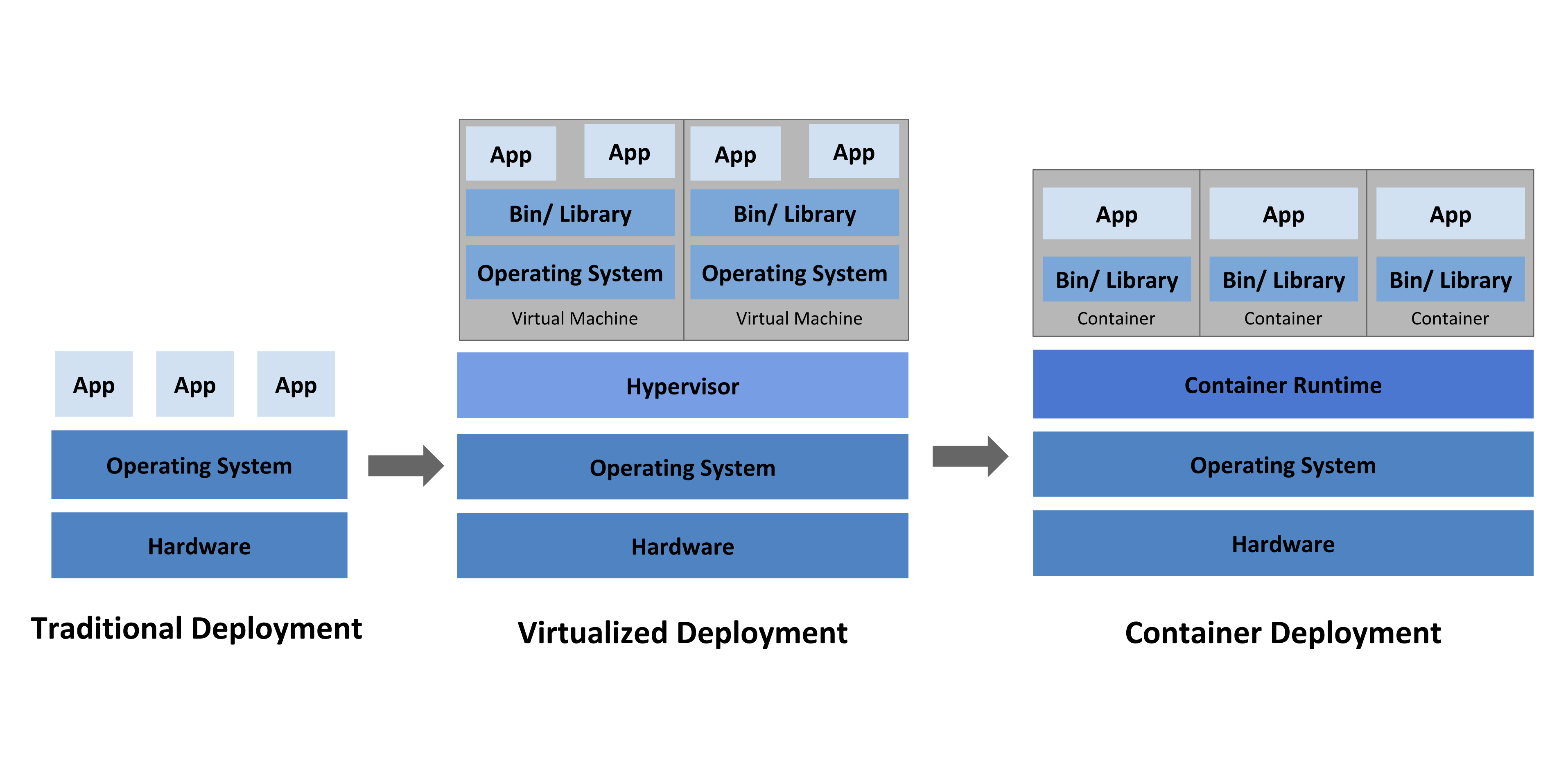

At its core, Kubernetes orchestrates and deploys containers. We recommend reading the section on containers before continuing with the rest of this document. Once you have built a container image and deployed it to Kubernetes, it runs on a cluster.

A Kubernetes cluster consists of a set of worker machines, called nodes, that run containerized applications. Every cluster has at least one worker node.

The worker node(s) host the Pods that are the components of the application workload. The control plane manages the worker nodes and the Pods in the cluster. In production environments, the control plane usually runs across multiple computers and a cluster usually runs multiple nodes, providing fault-tolerance and high availability.
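The relationship between the control plane, nodes, and Pods can be sketched as a toy placement routine. This is only an illustration of the idea: it uses a simple first-fit heuristic with the largest requests placed first, which is an assumption made here and not the algorithm the real Kubernetes scheduler uses. All node names, Pod names, and capacities are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_millicores: int  # free CPU in millicores (1000m = 1 core)
    memory_mib: int      # free memory in MiB
    pods: list = field(default_factory=list)


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def schedule(pods, nodes):
    """Place each pod on the first node with enough free CPU and memory.

    Returns a dict mapping pod name to node name, or to None if nothing fits.
    """
    placement = {}
    # Largest requests first, a common bin-packing heuristic.
    ordered = sorted(pods, key=lambda p: (p.cpu_millicores, p.memory_mib), reverse=True)
    for pod in ordered:
        placement[pod.name] = None
        for node in nodes:
            fits_cpu = node.cpu_millicores >= pod.cpu_millicores
            fits_memory = node.memory_mib >= pod.memory_mib
            if fits_cpu and fits_memory:
                # Reserve the resources on the chosen node.
                node.cpu_millicores -= pod.cpu_millicores
                node.memory_mib -= pod.memory_mib
                node.pods.append(pod.name)
                placement[pod.name] = node.name
                break
    return placement


nodes = [Node("node-a", 1000, 2048), Node("node-b", 2000, 4096)]
pods = [
    PodRequest("api", 750, 1024),
    PodRequest("worker", 1500, 2048),
    PodRequest("cache", 500, 512),
]
placement = schedule(pods, nodes)
print(placement)
```

With these example numbers, `worker` and `cache` land on `node-b` and `api` lands on `node-a`. A Pod that fits nowhere maps to `None`, loosely mirroring a Pod that stays pending.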

This document outlines the various components you need to have a complete and working Kubernetes cluster.

The diagram above shows a Kubernetes cluster with all the components tied together. The individual components are defined in the Terminology section that follows, for easy reference.

Terminology

Rancher: An open-source software product with a focus on the management of Kubernetes clusters. Some of the terms below are Rancher specific, but many of the general concepts apply to other types of Kubernetes management environments.

Clusters: A cluster is the highest level of the architecture within Rancher. Clusters contain projects which in turn can contain specific namespaces. Clusters define the compute resources that will be available to the projects and namespaces within them.

Projects: Projects are groups of namespaces. Within Rancher, projects allow users to be assigned to specific cluster resources without automatically gaining access to other projects within the cluster.

Namespaces: Namespaces provide a scope for resource names: a name must be unique within a namespace but can be reused in another namespace. They can also be used to assign resources and, in Rancher, security permissions.

The following resources can be assigned directly to namespaces:

- Workloads

- Load Balancers/Ingress

- Service Discovery Records

- Persistent Volume Claims

- Certificates

- ConfigMaps

- Registries

- Secrets
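As a rough illustration of how namespaces scope names, the sketch below creates a Namespace manifest and two ConfigMaps that share a name but live in different namespaces. The namespace names (`team-a-dev`, `team-a-prod`), the ConfigMap name, and the settings are hypothetical, and `make_configmap` and `resource_key` are helper functions invented for this example.

```python
import json


def make_configmap(name, namespace, data):
    """Return a ConfigMap manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": namespace},
        "data": data,
    }


def resource_key(manifest):
    """Identify a namespaced resource by (namespace, kind, name)."""
    meta = manifest["metadata"]
    return (meta["namespace"], manifest["kind"], meta["name"])


# Hypothetical namespaces and settings, for illustration only.
namespace = {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": "team-a-dev"}}
dev_config = make_configmap("app-settings", "team-a-dev", {"LOG_LEVEL": "debug"})
prod_config = make_configmap("app-settings", "team-a-prod", {"LOG_LEVEL": "info"})

# Same name, different namespaces: the keys differ, so the names do not clash.
keys = {resource_key(dev_config), resource_key(prod_config)}
print(json.dumps([namespace, dev_config, prod_config], indent=2))
```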

Pods: A pod is a group of one or more containers that share a network namespace and storage volumes. Pods often hold a single container, so the term pod is often used loosely as a synonym for container.
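A Pod with more than one container might look like the following sketch, in which two containers mount the same volume. The Pod name, container names, images, and mount paths are assumptions for illustration; `emptyDir` is a Kubernetes volume type that lasts only as long as the Pod does.

```python
import json

# Hypothetical names and images; "shared-data" is an emptyDir volume that
# exists only for the lifetime of the Pod.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar", "labels": {"app": "web"}},
    "spec": {
        "volumes": [{"name": "shared-data", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "example.com/web:1.0",
                # The web container writes its logs into the shared volume.
                "volumeMounts": [{"name": "shared-data", "mountPath": "/var/log/web"}],
            },
            {
                "name": "log-shipper",
                "image": "example.com/log-shipper:1.0",
                # The sidecar reads the same files through a read-only mount.
                "volumeMounts": [
                    {"name": "shared-data", "mountPath": "/logs", "readOnly": True}
                ],
            },
        ],
    },
}

pod_json = json.dumps(pod, indent=2)
print(pod_json)
```

Because both containers share the Pod's network namespace, they can also reach each other over `localhost`.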

Workloads: Workloads set the deployment rules for pods/containers. Based on these rules, Kubernetes deploys and updates pods depending on the state of the application.
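The idea of comparing rules against the current state can be sketched as a small reconciliation function. This is a conceptual toy under stated assumptions, not how Kubernetes controllers are implemented: the function `reconcile`, the action tuples, and the Pod names are all invented for illustration.

```python
def reconcile(desired_replicas, running_pods):
    """Return the actions needed to move the actual state toward the desired state.

    running_pods maps a pod name to True if it is healthy, False if it failed.
    """
    actions = []
    healthy = [name for name, ok in running_pods.items() if ok]
    # Failed pods are removed so that replacements can be created.
    for name, ok in running_pods.items():
        if not ok:
            actions.append(("delete", name))
    missing = desired_replicas - len(healthy)
    if missing > 0:
        # Too few healthy pods: create replacements.
        actions.extend(("create", f"new-pod-{i}") for i in range(missing))
    elif missing < 0:
        # Too many healthy pods: scale down the surplus.
        actions.extend(("delete", name) for name in healthy[:-missing])
    return actions


actions = reconcile(3, {"web-1": True, "web-2": False})
print(actions)
```

In this example the failed Pod is deleted and two new Pods are requested, so that three healthy replicas exist once the actions complete.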

Services: Services, which can be defined within Rancher, make workloads accessible to other workloads or to the outside world. This is usually done by exposing a port on an IP address.
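A minimal sketch of a Service definition follows, assuming a workload whose Pods carry the label `app: web` and listen on port 8080. Those values, the Service name, and the helper `selects` are assumptions for illustration. The helper only mimics the equality-based label matching that a Service selector performs.

```python
import json

# Hypothetical Service: forwards port 80 on the Service's cluster IP to
# port 8080 on every Pod labelled app=web.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "type": "ClusterIP",  # reachable only from inside the cluster
        "selector": {"app": "web"},
        "ports": [{"protocol": "TCP", "port": 80, "targetPort": 8080}],
    },
}


def selects(service, pod_labels):
    """True if every selector label is present on the pod with the same value."""
    selector = service["spec"]["selector"]
    return all(pod_labels.get(key) == value for key, value in selector.items())


print(json.dumps(service, indent=2))
print(selects(service, {"app": "web", "tier": "frontend"}))
```

To reach a workload from outside the cluster, the `type` would typically be `NodePort` or `LoadBalancer`, or the Service would sit behind an Ingress.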

Secrets: Secrets securely store data such as passwords, tokens, or keys and make them available for use in the Rancher environment.
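As a hedged sketch, a Secret manifest can be assembled as shown below. The helper `make_secret`, the Secret name, the namespace, and the credential values are all hypothetical. Values under `data` in a Secret manifest are base64-encoded, which is an encoding and not encryption, so access control and encryption at rest still need to be configured separately.

```python
import base64
import json


def make_secret(name, namespace, values):
    """Return an Opaque Secret manifest with base64-encoded values."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": encoded,
    }


# Placeholder credentials for illustration; never commit real values.
secret = make_secret(
    "db-credentials", "team-a-dev", {"username": "app", "password": "change-me"}
)
print(json.dumps(secret, indent=2))
```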

Volumes: Volumes are persistent storage locations that can be local to a pod/container or shared among multiple containers.

Storage Claims: Persistent volume claims are the mechanism used in Rancher to request and access persistent volumes. They are defined directly in the Volumes section of the Workload menu and can then be used directly when a new workload is created.

Longhorn Storage: Longhorn storage is a type of storage specific to Rancher that is described as lightweight, reliable, and easy-to-use distributed block storage. It can be installed on any Kubernetes cluster utilizing Helm with kubectl or the Rancher UI.
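Outside the Rancher UI, a storage claim can be sketched as a PersistentVolumeClaim manifest. The claim name, namespace, and size below are hypothetical, and the storage class name `longhorn` is an assumption about what a Longhorn installation provides; `kubectl get storageclass` lists the classes actually available in a cluster.

```python
import json

# Hypothetical claim: requests 5 GiB that a single node can mount read-write.
pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "web-data", "namespace": "team-a-dev"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": "longhorn",  # assumed class name, verify in your cluster
        "resources": {"requests": {"storage": "5Gi"}},
    },
}

# A Pod refers to the claim by name in its "volumes" list.
pod_volume = {
    "name": "data",
    "persistentVolumeClaim": {"claimName": pvc["metadata"]["name"]},
}

print(json.dumps(pvc, indent=2))
print(json.dumps(pod_volume, indent=2))
```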

kubectl: kubectl is the command-line tool that allows users to work with Kubernetes clusters. Additional kubectl documentation can be found in the Resources section below.
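For scripted use, kubectl can be driven from another program. The sketch below only assembles a `kubectl get` command and defines a helper that runs it when kubectl is installed. The function names and the namespace `team-a-dev` are hypothetical, and the example assumes kubectl is already configured to reach a cluster.

```python
import shutil
import subprocess


def kubectl_get_args(resource, namespace):
    """Build the argument list for `kubectl get <resource>` with JSON output."""
    return ["kubectl", "get", resource, "--namespace", namespace, "-o", "json"]


def run_if_available(args):
    """Run the command only when its executable is on PATH; otherwise return None."""
    if shutil.which(args[0]) is None:
        return None
    result = subprocess.run(args, capture_output=True, text=True, check=False)
    return result.stdout


args = kubectl_get_args("pods", "team-a-dev")
print(" ".join(args))
# To actually query a cluster, call: run_if_available(args)
```

Passing the arguments as a list, without a shell, avoids quoting problems if a namespace or resource name ever contains unusual characters.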